What’s All the Fuss about AI Safety?

A mapping of AI risks from the Big Innovation Centre, published ahead of the UK AI Safety Summit on 2-3 November 2023.

Artificial intelligence (AI) can potentially increase efficiency, accessibility, safety, justice, and innovation, among other advantages. Yet the UK’s AI Safety Summit was set up in response to growing concerns about the safety of employing AI. Here are twelve of those concerns, all of which are tied to both human and technological safety.

- Autonomy with unclear accountability: As artificial intelligence grows more independent and more complex, questions arise about who is responsible for its decisions and actions. This makes it difficult to hold people or organisations accountable for unfavourable results caused by AI use. The classic example: who is responsible if a self-driving car causes an accident?

- Loss of human control: As AI becomes more independent and makes its own decisions, there is concern that human control may be lost. Overriding an AI system’s decision that contradicts fundamental human principles or ethics may be difficult. For instance, in a medical setting, if an AI suggests a treatment plan that goes against a patient’s strongly held beliefs, a healthcare provider may face a difficult decision on whether to follow the AI’s recommendation or prioritise the patient’s values.

- Unintended consequences: AI systems can have consequences that are difficult to predict. For example, an AI system built to maximise certain features may unintentionally harm the environment, other aspects of society, or the world in which we live.

- Job displacement and irrelevance: Artificial intelligence, and especially generative AI, has the potential to automate many occupations, leading to significant job displacement and economic instability. This potential human irrelevance can also cause a loss of traditional skills and knowledge.

- Effect on human relationships: As AI becomes more prevalent in our lives, concerns arise about its influence on human relationships. Individuals’ capacity to create meaningful connections with other humans may suffer if they increasingly rely on AI-powered helpers or companions. An AI-driven takeover of social interaction may thus diminish human connections and life experiences.

- Homogenisation and lack of cultural diversity: AI algorithms, and the data they are trained on, tend to favour stereotypes or popular, mainstream content, leading to a homogenisation of culture and expression and potentially stifling diversity. AI systems may also inadvertently generate content that misrepresents certain cultures, leading to cultural insensitivity or offence.

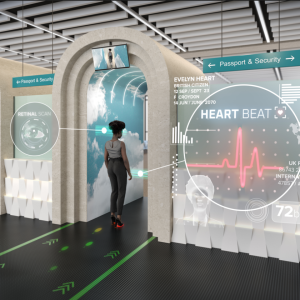

- Privacy and security: AI systems may collect and analyse vast amounts of data, raising concerns about privacy and security. This data can potentially harm individuals or organisations if it falls into the wrong hands.

- Transparency and explainability issues: Many AI algorithms are described as “black boxes,” meaning that understanding how they make decisions is difficult or impossible. This lack of transparency and explainability may make it difficult to recognise and correct biases, errors (or lack of precision in execution), or other ethical issues.

- Discrimination and bias: AI systems can be trained on unrepresentative or biased data, producing discriminatory results. Face recognition algorithms, for example, have been shown to be less accurate for people with darker skin tones, potentially leading to discrimination.

- Inequality and social justice: AI has the potential to aggravate existing disparities and social justice concerns. For instance, if AI algorithms are used to make hiring or lending decisions, they may perpetuate prejudices and discrimination.

- Misinformation, manipulation and deepfakes: AI can be used to manipulate public opinion or spread misinformation. AI-powered bots, for example, might be used to spread disinformation or amplify fake news. Furthermore, deepfakes use AI to create highly convincing, but entirely fabricated, audio, video, or images, which can lead to privacy violations.

- Generative AI has an IP problem: Companies train AI tools using data lakes containing thousands, or even millions, of unlicensed works, and the resulting tools can blend countless sources in their output. Is this a matter of moral rights being infringed upon or simply an issue of an outdated IP system, or perhaps both?

AI Safety

It is critical to ensure that AI is created and used responsibly and ethically, maximising its advantages while limiting its dangers and potential negative consequences. AI systems must therefore be developed and deployed responsibly, transparently, and accountably to promote the well-being of individuals, businesses and society.