Ensuring AI-Safety Amidst Market Rush: Strategies to Avoid Common Pitfalls

Top-12 Tips from Big Innovation Centre

Why the AI Market Rush

In the swiftly evolving digital economy, being the first mover is crucial because of the “winner takes all” principle. Industries that rely on network effects, such as social media, online marketplaces, and data-driven AI platforms, become more valuable as they attract more users, creating a self-reinforcing cycle that makes it difficult for competitors to catch up. Dominant players often control critical distribution channels, which act as significant barriers for new entrants. Market leaders with extensive reach can shape industries, consumer preferences, ethical norms, and entire societies. Hence, it’s vital for policies and regulations to keep competition and market choice diverse, preventing market distortions and inefficiencies.

First-mover advantages in market capture were famously set out by Joseph Schumpeter, an innovation economist who wrote about ‘creative destruction’ (the process by which innovations replace older technologies and business models, leading to economic progress). In that setting, first-mover advantages were typically followed by the rest of the industry catching up. The networked economy is different because of its ‘winner takes all’ principle, as explained by business-economic theorist Professor Brian Arthur of the Santa Fe Institute, USA.

In several interviews, Bill Gates, co-founder of Microsoft, also acknowledged the importance of being the first mover in the digital network era. He recognised that establishing a dominant position early on provides a significant advantage in the highly competitive tech industry.

However, AI differs from previous technology revolutions because of its immersive and human-centric nature. Unlike earlier technologies that were more narrowly practical or limited in scope, AI can interact with humans in a natural and intuitive way, which leads to its seamless integration into our daily lives and activities. Through our ‘fusion’ with AI technologies, it can also control decisions taken for us. Therefore, it’s crucial to prioritise safety and security amid this market rush.

To avoid sidelining safety and security in the race to market, consider the following strategies, grouped under two themes: fostering a culture of safety and security, and establishing a tradition of robust quality assurance.

Fostering a Culture of AI Safety and Security:

Creating a culture of safety and security requires a commitment from leadership. This entails tangible actions and decisions by top management that demonstrate an unwavering dedication to safety and security. This commitment should be ingrained in the company’s values and consistently communicated across all levels of the organisation. Communicating those values effectively, however, requires awareness-raising and training initiatives.

1) Employee Training and Awareness:

AI workshops and AI training programs should be implemented continuously. These sessions should not only educate employees about AI possibilities, AI safety issues and AI security protocols but also instil a sense of personal responsibility in each team member.

2) Integrating Safety and Security in Early Product Development:

Proactively embedding safety and security measures in product development focuses on designing products with built-in safeguards. This involves considering potential risks and vulnerabilities right from the outset during the brainstorming and design phases. This approach ensures that safety and security are not viewed as add-ons or afterthoughts but as integral components woven into the very essence of the product or service. Moreover, by incorporating these considerations early on, developers can create a more robust and trustworthy product that is better prepared to tackle unforeseen challenges in the future. This approach also nurtures a culture of responsibility within the development team, fostering a mindset where every member takes ownership of the safety and security aspects of the product. Developers must demonstrate a commitment to delivering a product that prioritises user well-being and upholds ethical standards.

3) Conducting Comprehensive Risk Assessments:

Identifying potential AI risks involves a systematic checklist exercise to ensure that all AI safety concerns are addressed. The AI checklist encompasses a range of critical topics, including AI’s impact on organisational accountability and product accountability, human oversight, unforeseen consequences, job displacement, human relationships, cultural diversity, privacy risks, transparency challenges, bias considerations, social inequality, data governance, and intellectual property concerns.
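The checklist exercise described above can be sketched as a small data structure. This is a minimal illustration rather than a prescribed tool: the topic list is taken from the paragraph above, while the `ChecklistItem` class and the `new_checklist` and `open_items` functions are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import List

# Topics taken from the checklist described in the text.
CHECKLIST_TOPICS = [
    "organisational accountability",
    "product accountability",
    "human oversight",
    "unforeseen consequences",
    "job displacement",
    "human relationships",
    "cultural diversity",
    "privacy risks",
    "transparency challenges",
    "bias considerations",
    "social inequality",
    "data governance",
    "intellectual property",
]


@dataclass
class ChecklistItem:
    """One checklist topic and its review status (hypothetical structure)."""
    topic: str
    reviewed: bool = False
    notes: str = ""


def new_checklist() -> List[ChecklistItem]:
    """Create a fresh checklist with every topic marked as not yet reviewed."""
    return [ChecklistItem(topic) for topic in CHECKLIST_TOPICS]


def open_items(checklist: List[ChecklistItem]) -> List[str]:
    """Return the topics that still need to be reviewed."""
    return [item.topic for item in checklist if not item.reviewed]
```

A team could mark each item as reviewed during the assessment and treat a non-empty result from `open_items` as a signal that the assessment is incomplete.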

4) Promoting Cross-Functional and Interdepartmental Collaboration:

Fostering cross-functional collaboration through interdepartmental communication is imperative for ensuring safety and security in product development. It goes beyond mere information sharing, creating an environment where various departments collaborate, pooling their expertise and insights to address safety and security concerns collectively. For instance, in a software development company, the engineering team may excel in building robust code while the legal team comprehends compliance requirements and potential legal pitfalls. By promoting collaboration, these teams can work together to ensure that the software not only functions effectively but also adheres to all relevant legal and regulatory standards. This may involve incorporating specific data protection measures or ensuring the software meets industry-specific security certifications. The benefit of this collaborative approach is that it leads to a more comprehensive and well-rounded solution. Each department brings unique perspectives and expertise, contributing to a holistic safety and security strategy.

5) Staying Informed and Adhering to Industry Standards and Regulations:

Staying informed entails proactively keeping abreast of the evolving landscape of industry-specific regulations and standards. It requires a dynamic approach, ensuring that the organisation not only meets current requirements but is also prepared for future changes. For instance, in today’s AI landscape, regulations and policies are rapidly evolving. Ways to stay informed include participating in information round tables associated with policy and regulation, such as the All-Party Parliamentary Group on Artificial Intelligence (APPG AI).

6) Setting Realistic Timelines and Milestones:

The key is to avoid rushed deadlines and establish realistic timelines for market rollout. This means allowing adequate time for thorough safety and security assessments without compromising the quality of the final product or service. It also entails thorough testing and, often, a phased market rollout.

7) Prioritising Executive or Board Accountability:

Clear reporting structures to the executive board should cover not only financial matters but also AI. Leaders of companies and public-sector organisations must understand how AI is reshaping the organisation and affecting its products and services when they make critical decisions about integrating AI technologies such as robotics, machine learning, and facial recognition into products and operations. AI safety extends beyond tangible safety concerns (such as traditional fire safety) and encompasses ethical topics and challenges tied to AI’s impact on business, society and humanity. Establishing an ethics board is a common practice to navigate these complexities. Prioritising a robust framework for reporting AI safety concerns is crucial for enforcing corporate accountability.

Establishing a Tradition of Robust Quality Assurance:

Establishing a tradition of robust quality assurance involves both a comprehensive internal evaluation of AI security measures and engagement with external experts and auditors, such as the UK Accreditation Service (UKAS), alongside following the industry best-practice standards communicated by the British Standards Institution (BSI). Finally, it includes post-market surveillance and monitoring, for example by watchdogs or through user feedback.

8) Implementing Internal Robust Testing:

Rigorous and thorough testing of AI-enabled products is not just about functionality; it’s also about ensuring they meet the highest AI safety and performance standards before being released to the market. For example, in the autonomous vehicle industry, internal testing involves simulating various real-world scenarios to ensure the AI system can make split-second decisions to avoid accidents.

9) Engaging External Experts:

Seeking external perspectives through independent accreditors, such as the UK Accreditation Service, is invaluable. It provides an unbiased evaluation of safety and security measures, often yielding fresh insights and recommendations for improvement, and it builds credibility with stakeholders and the market.

Consider the case of a financial institution implementing AI for fraud detection. It’s crucial to ensure that the algorithms don’t mistakenly label valid transactions as fraudulent. Similarly, when making lending decisions, algorithms must avoid discrimination based on biased assumptions. These are areas that accreditation bodies may thoroughly investigate.
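The two concerns in this example can be expressed as simple measurements. The sketch below is illustrative only: the function names, the record layout and the choice of metrics are assumptions made here, and the criteria that accreditation bodies actually apply are not specified in this article. It computes the share of valid transactions wrongly flagged as fraud (the false positive rate) and the largest gap in loan approval rates between applicant groups.

```python
from collections import defaultdict


def false_positive_rate(actual_fraud, flagged):
    """Share of valid (non-fraud) transactions that were flagged as fraudulent.

    Both arguments are equal-length sequences of booleans.
    """
    valid_flags = [f for a, f in zip(actual_fraud, flagged) if not a]
    if not valid_flags:
        return 0.0
    return sum(valid_flags) / len(valid_flags)


def approval_rate_gap(decisions):
    """Largest difference in approval rate between any two groups.

    `decisions` is a list of (group, approved) pairs; the grouping
    attribute is whatever the reviewer chooses to examine.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    rates = {g: approved[g] / totals[g] for g in totals}
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

A large approval gap does not by itself prove discrimination, but it shows where a closer review of the algorithm and its training data is warranted.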

10) Soliciting Feedback from Stakeholders:

Beyond internal assessments, actively seeking feedback from customers, employees, and other stakeholders is crucial. They often provide unique perspectives and can uncover potential AI safety and AI security concerns that would otherwise take time to become apparent. Customers’ experiences with AI-powered chatbots in a retail setting are one example of such feedback.

11) Conducting Post-Market Surveillance and Monitoring (e.g., Watchdogs):

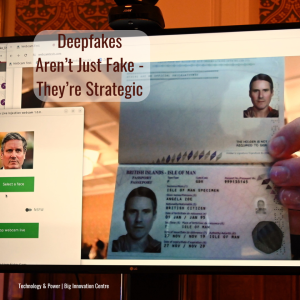

Once AI-enabled products or services are in the market, ongoing monitoring is essential to swiftly identify and address any safety or security issues that emerge in real-world usage. AI watchdogs can do this for (I) regulatory oversight, ensuring compliance with laws, regulations, and industry standards; (II) user protection, focusing on the ethical impact of AI adoption within organisations or markets; (III) social justice, monitoring AI adoption for discrimination, inequality, and other violations, and advocating for fairness and inclusivity; and (IV) data governance, examining how data is sourced and used. The implementation of face recognition is a pertinent example of when a watchdog becomes essential: it ensures a clear understanding of the intended purpose behind face recognition cameras and of how the associated data is utilised.

Watchdogs can also utilise AI. For example, in the financial industry, regulatory bodies could use AI to monitor AI-driven trading algorithms to ensure they don’t engage in market manipulation or insider trading.

12) Staying Informed about Emerging Risks, Technologies and Best Practices:

In the ever-evolving landscape of AI technology development, staying updated is a must. Continuous learning about the opportunities and risks of emerging technologies, and about ‘best practices’ in their adoption, helps developers, companies, and researchers to remain adaptive and well prepared to implement changes and new safeguards. For example, in the healthcare sector, staying informed about emerging AI technologies can help providers adopt state-of-the-art diagnostic tools while ensuring patient data privacy and security are maintained at the highest standards.

Strategies to avoid common pitfalls when ensuring AI safety and security amidst the market rush aren’t just about risk mitigation. They are about building trustworthy and credible organisations for all stakeholders in business and society, ultimately contributing to sustained success, enhanced life experiences with AI, increased welfare and ethical responsibility.