Data protection and anti-discrimination must be guaranteed in the deployment of Face and Emotion Recognition Technologies. A clear regulatory framework and an oversight body are required to ensure fair and safe use.

Above all, an ongoing dialogue with citizens should be maintained about the benefits and risks of data-driven surveillance technologies, especially when they are used by law enforcement and other public authorities. Transparency is paramount.

These were the arguments presented at the meeting of the All-Party Parliamentary Group on Artificial Intelligence (APPG AI) on 6 June 2020, which reviewed how AI-driven face and emotion recognition technologies will change public and private life in the future.

The APPG AI Evidence Meeting brought together experts from diverse fields, including technology, media and security.


A panel of experts

Joined by:

Matthias Spielkamp (Co-Founder and Executive Director, Algorithm Watch), Silkie Carlo (Director, Big Brother Watch), Professor Nadia Bianchi-Berthouze (Professor & Deputy Director of UCL Interaction Centre), Dr Temitayo Olugbade (Research Fellow at UCLIC), Dr Seeta Peña Gangadharan (Assistant Professor in the Department of Media and Communications, LSE), Matt Celuszak (CEO, Element Human), and Andrew Bud CBE (CEO and Founder, iProov).

The meeting was chaired by Stephen Metcalfe MP and Lord Clement-Jones CBE. Big Innovation Centre is the Secretariat of the APPG AI, led by Professor Birgitte Andersen (CEO), and the Rapporteur for the meeting was Dr Désirée Remmert.

The deployment of face and emotion recognition must guarantee data protection and must not discriminate against citizens: a clear regulatory framework is required.

Summary of the findings

The evidence presented at the meeting suggests that a clear regulatory framework is required for face and emotion recognition technology to be used responsibly. This framework should (i) ensure the quality and applicability of the data sets used to train these technologies, (ii) regulate audits and compliance checks, and (iii) outline rules for the collection, processing, and storage of citizens’ biometric data for public and commercial use.

– Face and Emotion Recognition Technologies must be trained on data that is representative and relevant for their specific purpose of use. This is to guarantee accurate results that do not replicate inherent biases, which would reinforce societal prejudices and perpetuate the systematic discrimination of individuals and groups. To achieve this, collected data should be regularly revised and adjusted, and a human should be kept ‘in the loop’ to check that the data is free of bias.

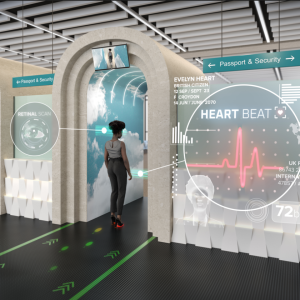

– Technologies must be used in a way that protects citizens’ privacy, does not reinforce societal prejudices, and does not exploit vulnerable groups and individuals.

– Face and Emotion Recognition Technologies must be regularly audited to guarantee their safety and to check whether they still fulfil their intended purpose.

– Public and private institutions and businesses must be transparent about (i) where Face and Emotion Recognition Technologies are deployed, (ii) what kind of data they collect, and (iii) how they are processed and stored. A public debate with citizens and stakeholders around the democratic implications of these technologies should be encouraged.

– The different types of face and emotion recognition technologies each come with specific sets of risks that should be considered in the design of a national regulatory framework. Data generated by these technologies may need to be assessed by a human for contextualisation and accuracy.


View the full recording of the meeting

A full 130-minute recording of the APPG AI Evidence Meeting: Face and Emotion Recognition

APPG AI Sponsors

This showcase is an output of the All-Party Parliamentary Group on Artificial Intelligence (APPG AI).

The sponsors, Blue Prism, British Standards Institution, Capita, CMS Cameron McKenna Nabarro Olswang, Creative England, Deloitte, Dufrain, Megger Group Limited, Microsoft, Omni Telemetry, Oracle, Osborne Clarke, PwC, Rialto Consultancy and Visa, enable us to raise the ambition of what we can achieve.

Big Innovation Centre is the Secretariat for the APPG AI, as appointed by the UK Parliament.