Technology and Power — Essay Series

How emerging technologies reshape economic power, governance and global competition.

Scaling AI with Trust: Finance, Insurance and the Future of Institutional Power

The core issue

Artificial intelligence is already embedded in finance and insurance.

The strategic question is no longer whether to adopt AI, but whether it can scale in ways that strengthen trust rather than undermine it. The answer will determine not only competitiveness but also the future resilience of financial systems.

Power implications

- Trust is becoming core infrastructure for financial AI

- Governance now shapes competitiveness

- Scaling responsibly is the UK’s strategic advantage

Introduction: beyond adoption

Artificial intelligence is no longer an emerging technology for finance and insurance. It is already embedded in how risk is assessed, fraud is detected and customers are served. Across banking, insurance and regulatory systems, AI is becoming part of the operational infrastructure of financial services.

The central challenge has therefore shifted. The question is not whether artificial intelligence will transform financial services, but whether it can scale in ways that reinforce public trust and institutional accountability.

Scaling artificial intelligence without scaling trust risks undermining the very systems it is intended to improve.

These reflections draw on recent parliamentary discussions, including a roundtable on artificial intelligence in finance and insurance held in the UK Parliament by the All-Party Parliamentary Group on Artificial Intelligence (APPG AI). They also draw on wider dialogue among research, industry and policy experts on how AI can be scaled responsibly within trusted financial and insurance systems.

The future competitiveness of the UK’s financial sector will depend not simply on adopting AI, but on scaling it responsibly within accountable institutional frameworks.

Innovation within accountable systems

A distinctive feature of the UK approach to AI in financial services is its emphasis on outcomes and accountability rather than technology-specific regulation. Existing regulatory frameworks already hold the firms that deploy AI, such as banks, insurers and other financial institutions, firmly responsible for the outcomes their systems produce, however automated those systems become.

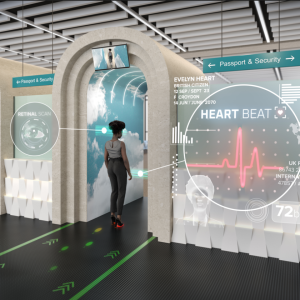

This approach recognises that the risks associated with AI in finance are not abstract. They shape lending decisions, insurance premiums, fraud detection and access to essential financial services. As decision-making becomes increasingly automated, the regulatory challenge is therefore institutional rather than purely technical: ensuring accountability remains clearly anchored even as technological complexity increases.

Testing environments and regulatory sandboxes are proving critical to striking this balance. They allow firms to experiment with new AI-enabled models while ensuring that risks are identified and managed early. This pragmatic, iterative approach is emerging as a competitive strength, allowing innovation to proceed without eroding trust.

Productivity gains require governance

The economic case for AI adoption across financial services is compelling. Persistent productivity challenges across compliance, customer service and risk management have created strong incentives for automation and advanced analytics.

Yet technological capability alone does not deliver transformation. Artificial intelligence can accelerate processes and enhance insight, but it requires robust systems engineering, governance frameworks and skilled human oversight. The most effective deployments are those that treat AI as an augmentation of professional expertise rather than a replacement for it.

Clear lines of accountability, explainability and human-in-the-loop decision-making remain essential – particularly when automated systems influence customers’ financial lives. Productivity gains from artificial intelligence are therefore not simply a function of technological adoption but of institutional readiness and governance capacity.

Insurance and the widening protection gap

In insurance, artificial intelligence is reshaping how risk is understood and managed. Advanced analytics and predictive modelling offer the potential to improve underwriting accuracy, accelerate claims processing and identify emerging risks earlier.

More responsive and preventative insurance models could help narrow the widening protection gap between the risks individuals and businesses face and the coverage available to them. However, the same tools that enable greater precision also raise new questions about fairness, transparency and accessibility.

Automated underwriting and claims systems must be subject to rigorous bias testing, and customers must be able to understand and challenge decisions that affect them. Responsible adoption therefore depends not only on technological capability but on strong ethical and governance frameworks embedded from the outset.

From experimentation to scalable adoption

Across financial services, the primary challenge is no longer identifying use cases for artificial intelligence but scaling them effectively. Many viable applications already exist, from automating complex documentation processes to enabling new forms of predictive risk analysis.

However, scaling these innovations requires supportive infrastructure: access to high-quality data, skilled talent and growth capital. Structural constraints (particularly the UK’s persistent scale-up finance gap) remain barriers to translating domestic innovation into global leadership.

Without sustained investment in infrastructure and skills, promising applications may remain confined to pilot stages rather than achieving systemic impact.

Artificial intelligence is becoming part of the institutional infrastructure on which financial trust depends.

Trust as strategic infrastructure

Across regulatory, banking, insurance and technology perspectives, a consistent principle is emerging: trust must be treated as core infrastructure for artificial intelligence adoption.

Responsible deployment requires systems that can be audited, monitored and challenged, alongside governance frameworks that ensure accountability remains clear even as automation increases. Trust, in this context, is not a reputational concern but an operational requirement.

The UK’s competitive advantage is unlikely to come from dominating the development of foundational AI models. Instead, it lies in demonstrating how artificial intelligence can be deployed responsibly within highly regulated sectors where reliability and public confidence are paramount.

Governance as competitive advantage

This reframes the relationship between regulation and innovation. Rather than acting as a constraint, effective governance can function as an enabler of sustainable adoption. Firms and jurisdictions able to demonstrate reliable, accountable and transparent deployment of AI will be better positioned to scale innovation and attract investment.

The priorities ahead are therefore clear. Policymakers must support skills development, data access and open finance frameworks. Regulators should continue developing practical tools and sandboxes for testing and assurance. Industry leaders need to prioritise accountability and real-world impact alongside technological capability.

Conclusion: scaling with trust

Artificial intelligence is already reshaping finance and insurance. Whether it leads to greater inclusion, resilience and productivity – or to new risks and inequalities – will depend on how it is governed and deployed.

Scaling artificial intelligence with trust is not a constraint on innovation.

It is the foundation on which sustainable innovation and institutional legitimacy will depend.

This series explores how emerging technologies reshape economic structures, governance systems and global power relations.

Professor Birgitte Andersen is Professor of the Economics and Management of Innovation and leads research on the political economy of emerging technologies.