Technology and Power — Essay Series

How emerging technologies reshape economic power, governance and global competition.

AI Moves into the Real World: Power, Infrastructure and the Next Phase of Governance

The core issue

The next phase of artificial intelligence will not be defined by ever more capable chatbots.

It will be defined by systems that understand, act in and shape the real world.

This marks a shift from information technology to infrastructure technology — and from digital convenience to structural power.

Power implications

- From language systems to real-world systems

- From software tools to operational infrastructure

- From model performance to governance of deployment

Introduction: beyond chatbots

Much public debate about artificial intelligence continues to focus on the rapid evolution of large language models (LLMs) and conversational systems. Yet across research, industry and policy communities, a broader transition is underway. Artificial intelligence is moving beyond language and into physical environments, decision systems and critical infrastructures.

The next phase of artificial intelligence will be defined not by more capable chatbots, but by systems that act in the real world.

This transition represents more than a technical advance. It signals a structural shift in how technological power is exercised and governed. As AI becomes embedded in the systems that organise production, services and everyday life, the question is no longer simply how intelligent these systems become, but how they are integrated into real-world environments and institutions.

These reflections draw on recent parliamentary discussions, including an APPG AI roundtable on AI horizon scanning held in the UK Parliament, alongside wider expert dialogue across research, industry and policy communities on how artificial intelligence is moving from information processing into operational systems.

The future trajectory of artificial intelligence will be shaped less by scale alone than by how responsibly and strategically it is deployed within real-world systems.

From language to world models

Current policy frameworks often assume that future artificial intelligence will resemble more powerful versions of today’s language-based systems. However, growing expert consensus suggests that the next phase of development will centre on systems capable of modelling and interacting with physical reality.

Language models operate on text. The real world consists of environments, signals, sensor data and complex dynamic systems. Artificial intelligence capable of reasoning about such environments will expand its usefulness across robotics, infrastructure management, healthcare and industrial production.

At the same time, systems that act in the world cannot simply be “reset” when they fail. Errors may have material consequences, affecting safety, economic performance and public trust. Governance will therefore need to evolve from managing information inputs and outputs to overseeing real-world actions. In other words, AI governance can no longer focus only on data and content; it must also address what AI systems actually do in the real world.

Who shapes the AI that shapes society?

As AI systems become embedded across devices, platforms and services, they will increasingly mediate how individuals access information, make decisions and interact with institutions. This creates structural questions about control and ownership.

If a small number of global platforms dominate AI infrastructure, they will inevitably influence which languages, values and perspectives are prioritised. Over time, this may narrow cultural and informational diversity even without deliberate centralisation.

Supporting open and interoperable AI ecosystems therefore becomes a strategic policy choice rather than a purely technical one. Open and distributed models enable regions and organisations to adapt systems to local contexts, supporting technological sovereignty and institutional resilience. Openness in artificial intelligence increasingly becomes a matter of economic and political strategy.

Infrastructure, energy and computational power

The convergence of artificial intelligence with advanced computing architectures, including quantum and photonic technologies, adds further strategic significance. As computational demand and data-centre energy consumption rise, infrastructure capability becomes a core determinant of economic competitiveness.

More efficient computing architectures and specialised accelerators will shape national capacity to sustain large-scale AI deployment. Data stewardship, computational resilience and energy-efficient design must therefore be treated as elements of industrial and economic policy rather than purely technical considerations.

The governance of artificial intelligence increasingly intersects with the governance of infrastructure itself.

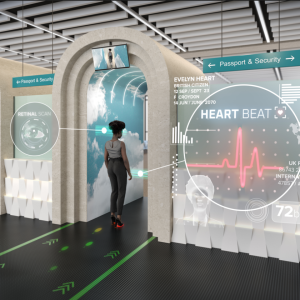

Agentic AI and operational governance

A more immediate transformation is the emergence of agentic artificial intelligence: systems capable not only of generating outputs but of initiating and coordinating actions.

In industrial and organisational environments, such systems are already issuing work orders, triggering processes and coordinating operations. Artificial intelligence in these contexts becomes part of operational infrastructure rather than a stand-alone tool.

This shift changes the nature of risk. The central concern is no longer only that an AI system might produce an incorrect answer, but that it might take an incorrect action. Governance must therefore be embedded directly within system architecture.

Key design principles are emerging:

- clearly bounded authority for AI systems

- strong auditability and observability

- robust rollback and override mechanisms (i.e., systems must be designed so that humans can quickly stop, reverse, or take control if an AI makes a wrong decision or behaves unexpectedly)

- protection against manipulation and malicious inputs

Operational governance becomes inseparable from system design.
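
To make these design principles concrete, the following is a minimal illustrative sketch in Python, not a description of any deployed system. All names (`Action`, `GovernedAgent`, `issue_work_order`, `shut_down_plant`) are hypothetical and chosen only for illustration. It shows bounded authority as an allow-list, auditability as an append-only log, and human override and rollback as explicit operations. Protection against manipulation is only partly covered here: the allow-list limits what a manipulated agent can do, but a real system would also need to validate its inputs.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Action:
    """A hypothetical real-world action that can be applied and reversed."""
    name: str
    apply: Callable[[], None]   # performs the action
    undo: Callable[[], None]    # reverses the action


@dataclass
class GovernedAgent:
    """Wraps action execution in governance checks (illustrative only)."""
    allowed: Set[str]                                   # bounded authority
    halted: bool = False                                # human override flag
    audit_log: List[str] = field(default_factory=list)  # auditability
    history: List[Action] = field(default_factory=list)

    def execute(self, action: Action) -> bool:
        """Run the action only if it is permitted; log every decision."""
        if self.halted:
            self.audit_log.append(f"REFUSED (halted): {action.name}")
            return False
        if action.name not in self.allowed:
            self.audit_log.append(f"REFUSED (outside authority): {action.name}")
            return False
        action.apply()
        self.history.append(action)
        self.audit_log.append(f"EXECUTED: {action.name}")
        return True

    def halt(self) -> None:
        """Human override: stop the agent from taking further actions."""
        self.halted = True
        self.audit_log.append("HALTED by human operator")

    def rollback(self) -> int:
        """Undo executed actions in reverse order; return how many were undone."""
        undone = 0
        while self.history:
            action = self.history.pop()
            action.undo()
            self.audit_log.append(f"ROLLED BACK: {action.name}")
            undone += 1
        return undone


# Usage: a toy "plant" represented by a list of issued work orders.
orders: List[str] = []
agent = GovernedAgent(allowed={"issue_work_order"})
issue = Action("issue_work_order", lambda: orders.append("WO-1"), lambda: orders.pop())
shutdown = Action("shut_down_plant", lambda: orders.append("SHUTDOWN"), lambda: orders.pop())

agent.execute(issue)      # within authority, so it runs
agent.execute(shutdown)   # outside authority, so it is refused and logged
agent.halt()              # a human stops the agent
agent.rollback()          # the executed work order is reversed
```

The point of the sketch is that each governance property lives in the execution path itself rather than in a separate review process: an action that is not on the allow-list cannot run, and every decision, including refusals, leaves a record.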

Artificial intelligence is shifting from a tool of information to an infrastructure of real-world power.

Decision intelligence and complex systems

The next frontier lies in AI systems capable of reasoning over time and supporting complex decision-making. Rather than simply predicting outcomes, such systems evaluate trade-offs, model uncertainty and recommend courses of action within dynamic environments.

Applications in healthcare, infrastructure and public policy illustrate this potential. Simulation environments and digital twins can enable systems and policies to be tested before deployment, supporting improvement without real-world risk. Continuous monitoring and adaptive learning may allow governance to evolve from one-off approval processes to ongoing assurance.

Artificial intelligence thus becomes a tool not only for optimisation but for managing complexity itself.

Synthetic data and the future of evidence

The expansion of synthetic data introduces new opportunities for innovation alongside new governance challenges. Properly generated synthetic datasets can reduce privacy risks, improve representation and enable experimentation where real-world data is limited or sensitive.

However, their effectiveness depends on robust generation methods and quality controls. Questions regarding copyright, provenance and legal accountability remain unresolved. Without clear frameworks, synthetic data may become both a driver of innovation and a source of new constraints.

Governance must therefore address not only how data is used, but how it is generated.

Governance must focus on deployment

Among experts, a central principle is emerging: governance should focus on deployment and use rather than attempting to restrict research itself. Overly restrictive controls on development risk slowing open scientific progress and reinforcing the dominance of the largest global actors.

By contrast, robust assurance mechanisms (including auditing, benchmarking and post-deployment monitoring) address risks where they arise: in real-world applications. This represents a shift from abstract debates about AI risk to practical governance of AI systems in operation.

The human question

The long-term resilience of artificial intelligence adoption will depend as much on human capability as on technological design. Education, professional training and institutional capacity will shape whether societies can effectively govern increasingly complex systems.

Building public and professional capability to understand, question and audit AI will be essential for sustaining trust. Without such capacity, even well-designed systems may struggle to achieve legitimacy.

Conclusion: AI as infrastructure of power

Artificial intelligence is entering a new phase. The trajectory is moving:

- from language to real-world understanding

- from passive tools to active systems

- from isolated applications to interconnected infrastructures

As this transition unfolds, AI will increasingly function as infrastructure shaping economic performance, institutional capacity and geopolitical balance.

If a country relies heavily on AI systems built elsewhere, its economy may depend on external platforms, its data may flow abroad, its institutions may use tools shaped by others’ values, and its industries may be vulnerable to external control.

Scaling artificial intelligence responsibly will not slow innovation. It will determine whether innovation delivers durable economic and societal value.

This series explores how emerging technologies reshape economic structures, governance systems and global power relations.

Professor Birgitte Andersen is Professor of the Economics and Management of Innovation and leads research on the political economy of emerging technologies.